Use Cases of TensorFlow Lite For On-Device ML Capabilities

What if your phone or smartwatch could process AI tasks instantly, without connecting to the cloud, saving time and keeping your data private?

That’s exactly what LiteRT, Google’s high-performance runtime for on-device AI, delivers. Formerly known as TensorFlow Lite, LiteRT is the next step for on-device Machine Learning, bringing faster, smarter, and more efficient AI to devices.

Whether you are building next-gen AI/ML apps or embedding intelligence into hardware, dive into the real benefits and trendsetting use cases of TensorFlow Lite.

TensorFlow Lite For Low Latency, Offline, On-Device ML

LiteRT is built for real-world, on-device ML (ODML). It is optimized to address the common hurdles developers face when building AI for mobile and embedded devices.

For instance, say you want to add a smart feature to your app, like recognizing images or understanding spoken commands, without relying on a stable internet connection. LiteRT lets developers run ML models right on the user’s device, making the feature faster and more efficient.

That’s not all! Let’s break down why TensorFlow Lite stands out as a useful, in-demand framework for integrating on-device AI and ML capabilities.

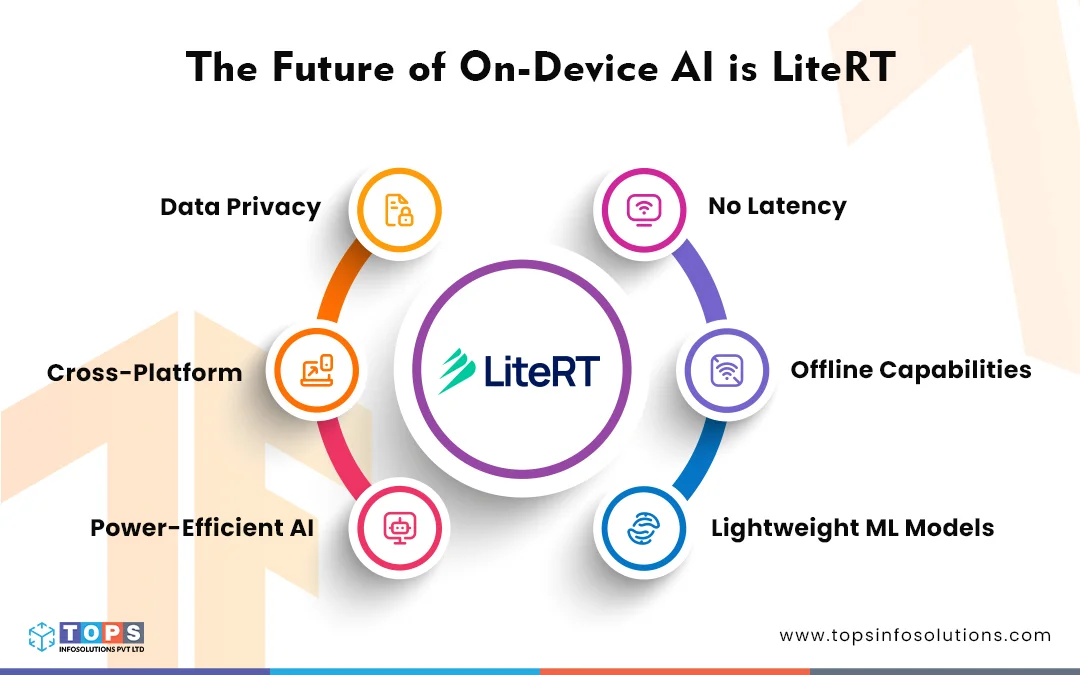

Why is TensorFlow Lite the Future of Mobile Intelligence?

The value of TensorFlow Lite isn’t just theoretical; it’s changing the game in real-world applications. Here are a few standout benefits of TensorFlow Lite that show what on-device ML makes possible, letting users access ML features in an app with minimal use of the internet. A lot more is possible with this framework.

Low Latency, Instant Results

Because LiteRT runs everything directly on the device, there is no need for a round trip to the cloud. Your AI models process data on the spot, without network delay, whether it’s image classification, face detection, voice recognition, or predictive text.

Offline Capabilities

You can use Machine Learning features without a constant internet connection. That makes TensorFlow Lite a popular choice for apps that need to work in remote areas or for users on the go who don’t always have reliable internet access.

Tiny Models, Big Impact

TFLite reduces the size of models and binary files, making it a great fit for devices with limited storage and processing power. Think of smartwatches, fitness trackers, surveillance systems, or any other IoT device: LiteRT helps run AI tasks smoothly, even in small, resource-constrained environments.

Your Data Stays Private

With LiteRT, the data your models process doesn’t have to leave the device. Since inference doesn’t involve external servers, sensitive inputs stay local, which helps protect user privacy. This is a huge advantage, especially for apps handling sensitive information like healthcare, fitness, or location data.

Cross-Platform

Whether you are developing for Android, iOS, or IoT devices, TensorFlow Lite works seamlessly across platforms. It’s adaptable and versatile, making it easy to integrate with mobile apps, embedded systems, and edge devices.

Power-Efficient AI

TensorFlow Lite is designed to run Machine Learning models with minimal power consumption. By reducing the need for constant network connections, it helps extend the battery life of mobile devices and embedded systems.

Ready-To-Run LiteRT Models and Multiple Framework Support

LiteRT makes getting started easier by offering a library of ready-to-run models for common Machine Learning tasks like language processing, image recognition, and more. But that’s not all: LiteRT also supports conversion tools that let you bring in models from popular frameworks like TensorFlow, JAX, and PyTorch.

Using AI Edge conversion tools, developers can quickly optimize and convert these models into the TensorFlow Lite format, making them lightweight and efficient for on-device use.

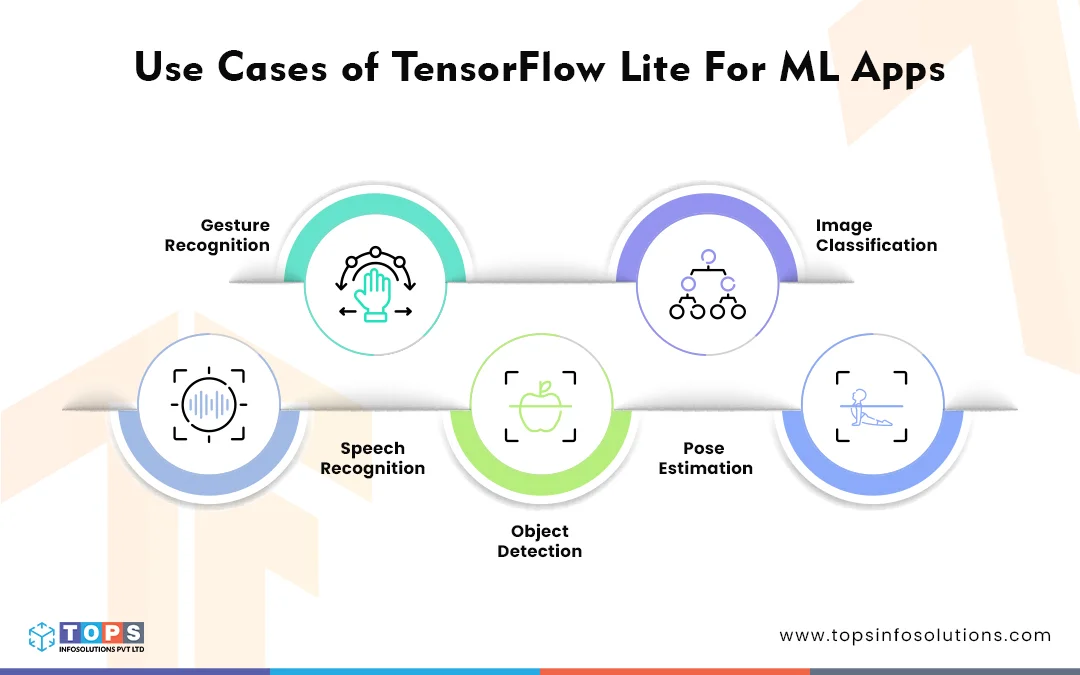

TensorFlow Lite Use Cases For Building Future-Ready Machine Learning Apps

Things get really interesting when you explore what LiteRT can do. Here are a few ways it is already transforming everyday experiences.

Gesture Recognition

Have you ever had your phone respond to a swipe, wave, or tap? TensorFlow Lite can help apps recognize these gestures in real time. This makes the user interface more intuitive, allowing for smooth navigation and a better overall experience.

The typical steps are collecting sensor data while gestures are performed, using this data to train the ML model, converting the model into the TensorFlow Lite format, and integrating it into the mobile app.
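
The sensor-data step described above usually means cutting continuous recordings into fixed-size, labeled windows that a model can learn from. Here is a minimal sketch in standard-library Python, assuming a 3-axis accelerometer sampled at a fixed rate; the function names, window size, and stride are hypothetical choices for illustration, not part of any TensorFlow Lite API.

```python
from typing import List, Sequence, Tuple

# One accelerometer reading as (x, y, z); this layout is an assumption.
Sample = Tuple[float, float, float]


def make_windows(samples: Sequence[Sample], label: str,
                 window_size: int, stride: int) -> List[Tuple[List[Sample], str]]:
    """Split one recorded gesture session into fixed-size, labeled windows.

    Windows that would run past the end of the recording are dropped, so every
    training example has exactly `window_size` samples.
    """
    if window_size <= 0 or stride <= 0:
        raise ValueError("window_size and stride must be positive")
    return [
        (list(samples[start:start + window_size]), label)
        for start in range(0, len(samples) - window_size + 1, stride)
    ]


def flatten(window: Sequence[Sample]) -> List[float]:
    """Flatten [(x0, y0, z0), (x1, y1, z1), ...] into one model input row."""
    return [value for sample in window for value in sample]


if __name__ == "__main__":
    # Hypothetical 10-sample recording of a "wave" gesture.
    session = [(float(i), 0.0, 9.8) for i in range(10)]
    dataset = make_windows(session, "wave", window_size=4, stride=2)
    print(len(dataset))  # 4 windows, starting at samples 0, 2, 4 and 6
    print(flatten(dataset[0][0]))
```

Overlapping windows (a stride smaller than the window size) yield more training examples from the same recording; at run time, the app would feed the same kind of window into the converted model.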

Image Classification

Ever used an app that identifies objects in photos instantly? TensorFlow Lite enables mobile apps to classify images using pre-trained models. This automates tasks across many fields, from retail apps that recognize products to healthcare apps that analyze medical images.

The workflow includes obtaining a pre-trained model, converting it into the TensorFlow Lite format, integrating it into the mobile app, preprocessing input images, and running inference with the model. Following these steps, developers can leverage ML and AI on mobile and embedded devices to build intelligent, innovative apps that classify and analyze images.
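
Preprocessing input images and reading the classifier’s output are plain arithmetic around the model call. The sketch below, in standard-library Python, assumes a float model that expects pixels scaled to [-1, 1] via (p - 127.5) / 127.5; that is a common convention for some image classifiers but not a universal one, so check what your model expects. Resizing and the interpreter call itself are left out, and the helper names are hypothetical.

```python
from typing import List, Sequence, Tuple


def normalize_pixels(pixels: Sequence[int], mean: float = 127.5,
                     std: float = 127.5) -> List[float]:
    """Map 8-bit pixel values (0-255) to floats using (p - mean) / std.

    With the default mean and std this yields values in [-1.0, 1.0]. Other
    models may expect [0, 1] or raw uint8 input instead (an assumption here).
    """
    out = []
    for p in pixels:
        if not 0 <= p <= 255:
            raise ValueError(f"pixel value out of range: {p}")
        out.append((p - mean) / std)
    return out


def top_k(scores: Sequence[float], labels: Sequence[str],
          k: int = 3) -> List[Tuple[str, float]]:
    """Return the k highest-scoring (label, score) pairs, best first."""
    if len(scores) != len(labels):
        raise ValueError("scores and labels must have the same length")
    ranked = sorted(zip(labels, scores), key=lambda pair: pair[1], reverse=True)
    return ranked[:k]


if __name__ == "__main__":
    print(normalize_pixels([0, 255]))  # [-1.0, 1.0]
    # Hypothetical scores, standing in for the classifier's output tensor.
    print(top_k([0.1, 0.7, 0.2], ["cat", "dog", "bird"], k=2))
```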

Speech Recognition

With TensorFlow Lite, developers can build apps that understand voice commands and convert spoken words into text instantly. This is a game-changer for virtual assistants and transcription apps, making them more efficient and user-friendly.

The steps start with gathering speech data as audio files and labeling them with the corresponding text, which helps the model learn the relationship between sounds and words. This data is used to train the ML model to recognize audio signals and map them to text. The trained model is then converted to the TensorFlow Lite format, and the app preprocesses the audio input and runs the model on the device.
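
How a speech model’s output becomes text depends on its architecture. As an assumption for illustration, suppose the model was trained with Connectionist Temporal Classification (CTC), a common choice for speech recognition, so it emits one score per symbol for each audio frame, including a special "blank" symbol. Greedy CTC decoding then picks the best symbol per frame, collapses repeats, and drops blanks. The alphabet and blank index below are hypothetical.

```python
from typing import List, Sequence

BLANK = 0  # index of the CTC blank symbol (assumed to be 0 in this sketch)


def ctc_greedy_decode(frame_scores: Sequence[Sequence[float]],
                      alphabet: str) -> str:
    """Greedy CTC decoding of per-frame symbol scores into text.

    frame_scores[t][i] is the score for symbol i at frame t, where index 0 is
    the blank and index i >= 1 maps to alphabet[i - 1].
    """
    # Best-scoring symbol index for every frame.
    best: List[int] = [max(range(len(s)), key=s.__getitem__) for s in frame_scores]
    chars = []
    previous = None
    for index in best:
        # Collapse runs of the same symbol, then drop blanks. A blank between
        # two identical symbols keeps them distinct (e.g. the "ll" in "hello").
        if index != previous and index != BLANK:
            chars.append(alphabet[index - 1])
        previous = index
    return "".join(chars)


if __name__ == "__main__":
    def one_hot(index: int, size: int = 5) -> List[float]:
        return [1.0 if i == index else 0.0 for i in range(size)]

    # Hypothetical alphabet: e=1, l=2, h=3, o=4 (0 is blank).
    frames = [one_hot(i) for i in [3, 3, 1, 2, 0, 2, 4, 4]]
    print(ctc_greedy_decode(frames, "elho"))  # hello
```

Greedy decoding is the simplest option; beam search or a language model can improve accuracy at extra cost.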

Object Detection

TensorFlow Lite is used to build mobile applications that can detect and locate multiple objects in images or videos. This technology is highly useful for security applications like surveillance, for inventory management in retail, and in a wide range of niche business use cases.

The steps include training your own object detection model on annotated images or starting from a pre-trained model, converting it into the TensorFlow Lite format, and integrating it into the mobile app.
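
A detection model typically proposes many overlapping boxes, so the app needs a post-processing step that keeps the best one per object. Some converted detection models may already run this step inside the model, while others leave it to the app. The standard-library sketch below shows greedy non-maximum suppression (NMS) using intersection-over-union (IoU); the box layout and both thresholds are assumptions for illustration.

```python
from typing import List, Sequence, Tuple

# Box as (ymin, xmin, ymax, xmax) in normalized [0, 1] coordinates (assumed layout).
Box = Tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    inter_h = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_w = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    intersection = inter_h * inter_w
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - intersection
    return intersection / union if union > 0 else 0.0


def non_max_suppression(boxes: Sequence[Box], scores: Sequence[float],
                        score_threshold: float = 0.5,
                        iou_threshold: float = 0.5) -> List[int]:
    """Return indices of kept boxes, highest score first.

    Drops low-confidence boxes, then greedily keeps the best remaining box and
    discards any box that overlaps an already-kept box by more than iou_threshold.
    """
    candidates = [i for i, s in enumerate(scores) if s >= score_threshold]
    candidates.sort(key=lambda i: scores[i], reverse=True)
    kept: List[int] = []
    for i in candidates:
        if all(iou(boxes[i], boxes[j]) <= iou_threshold for j in kept):
            kept.append(i)
    return kept


if __name__ == "__main__":
    # Hypothetical raw detections: boxes 0 and 1 cover the same object.
    boxes = [(0.1, 0.1, 0.5, 0.5), (0.12, 0.12, 0.52, 0.52),
             (0.6, 0.6, 0.9, 0.9), (0.0, 0.0, 0.1, 0.1)]
    scores = [0.9, 0.8, 0.7, 0.3]
    print(non_max_suppression(boxes, scores))  # [0, 2]
```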

Pose Estimation

TensorFlow Lite is widely used to build next-gen apps for fitness and gaming. It helps apps detect human poses in real time from images and video, tracking the user’s movements to create an interactive and fun experience.

The steps to integrate pose estimation capabilities involve using a pre-trained pose estimation model, converting it into the TensorFlow Lite format, and integrating it into the mobile app.
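
Once a pose model returns keypoints, a fitness app still has to turn them into something meaningful, such as the elbow angle during a bicep curl. The sketch below assumes each keypoint arrives as (y, x, confidence) in normalized image coordinates, which matches MoveNet-style models but is an assumption here; the `joint_angle` helper and its confidence threshold are hypothetical.

```python
import math
from typing import Optional, Tuple

# Keypoint as (y, x, confidence); the layout is an assumption, check your model.
Keypoint = Tuple[float, float, float]


def joint_angle(a: Keypoint, b: Keypoint, c: Keypoint,
                min_confidence: float = 0.3) -> Optional[float]:
    """Angle ABC in degrees at joint b (e.g. shoulder-elbow-wrist).

    Returns None if any keypoint is below min_confidence, so the app can skip
    unreliable frames instead of reacting to noise.
    """
    if min(a[2], b[2], c[2]) < min_confidence:
        return None
    v1 = (a[1] - b[1], a[0] - b[0])  # vector b -> a as (dx, dy)
    v2 = (c[1] - b[1], c[0] - b[0])  # vector b -> c as (dx, dy)
    norm = math.hypot(*v1) * math.hypot(*v2)
    if norm == 0:
        return None  # two keypoints coincide, so the angle is undefined
    cos_theta = (v1[0] * v2[0] + v1[1] * v2[1]) / norm
    # Clamp to [-1, 1] to guard against floating-point drift before acos.
    cos_theta = max(-1.0, min(1.0, cos_theta))
    return math.degrees(math.acos(cos_theta))


if __name__ == "__main__":
    shoulder = (0.0, 0.5, 0.9)
    elbow = (0.5, 0.5, 0.9)
    wrist = (0.5, 1.0, 0.9)
    print(joint_angle(shoulder, elbow, wrist))  # 90.0 (arm bent at a right angle)
```

Counting repetitions could then be as simple as watching this angle cross a low and a high threshold in turn.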

TensorFlow Lite: Simplifying AI Development For Everyone

Ready to turn your apps into intelligent systems that think on their own? With TensorFlow Lite, the future of mobile machine learning is already here. Let’s explore some of its most in-demand applications.

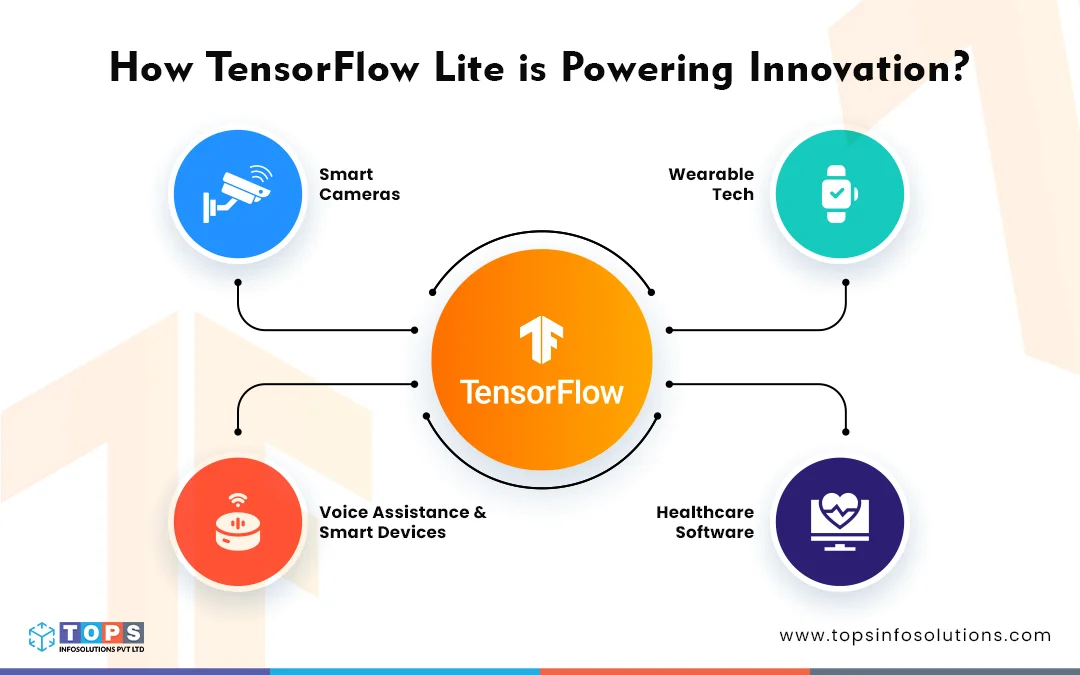

Smart Cameras

From instant object detection to advanced photo editing, LiteRT enables powerful image processing right on your device. Think AR filters, face recognition, or real-time object tracking, all without needing cloud support.

Wearable Tech

Health monitoring devices and fitness trackers can use TensorFlow Lite to analyze motion, track heart rate, and deliver personalized health insights, all while consuming very little power.

Voice Assistants And Smart Devices

With LiteRT’s ability to process voice commands in real time, your smart home assistant can become faster and more responsive, even offline.

Healthcare Software

Medical imaging apps can now analyze data directly on the device, supporting faster diagnoses in areas with limited connectivity. Be it scanning X-rays or monitoring vital signs, LiteRT opens the door to real-time healthcare solutions.

Building Intelligent Apps Has Never Been Easier

Whether it’s automating tasks, enhancing app performance, or creating entirely new functionalities, the possibilities with TensorFlow Lite are endless.

TFLite’s simple architecture makes it easy to convert and optimize existing models for on-device use. Plus, with the pre-trained models available, developers can quickly get started on building intelligent apps that don’t just react, but adapt in real time.

The TensorFlow Lite framework also provides tools like GPU acceleration and model quantization to further enhance performance, with quantization reducing the size of ML models, often with little loss in accuracy. It brings cutting-edge ML right into the heart of mobile and IoT innovation.
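
To make the quantization idea concrete: TensorFlow Lite’s converter can apply post-training quantization for you (for example by setting `converter.optimizations = [tf.lite.Optimize.DEFAULT]` on a `tf.lite.TFLiteConverter`), storing weights as 8-bit integers instead of 32-bit floats. The standard-library sketch below is a simplified, per-tensor version of the affine mapping q = round(x / scale) + zero_point, not the converter’s actual implementation. It shows why storage drops about 4x (1 byte instead of 4 per value) and how small the rounding error stays.

```python
from typing import List, Sequence, Tuple


def quantize_int8(values: Sequence[float]) -> Tuple[List[int], float, int]:
    """Simplified per-tensor affine int8 quantization: q = round(x / scale) + zero_point."""
    if not values:
        raise ValueError("values must not be empty")
    # Stretch the range to include 0.0 so that zero is exactly representable.
    lo = min(min(values), 0.0)
    hi = max(max(values), 0.0)
    scale = (hi - lo) / 255.0  # 256 int8 levels span 255 steps
    if scale == 0.0:
        scale = 1.0  # all-zero tensor: any positive scale works
    zero_point = int(round(-128 - lo / scale))
    # Clamp to the int8 range [-128, 127] to absorb rounding at the edges.
    q = [max(-128, min(127, int(round(x / scale)) + zero_point)) for x in values]
    return q, scale, zero_point


def dequantize(q: Sequence[int], scale: float, zero_point: int) -> List[float]:
    """Map int8 values back to approximate floats: x = (q - zero_point) * scale."""
    return [(v - zero_point) * scale for v in q]


if __name__ == "__main__":
    weights = [-1.0, -0.25, 0.0, 0.5, 1.0]  # hypothetical layer weights
    q, scale, zero_point = quantize_int8(weights)
    restored = dequantize(q, scale, zero_point)
    print(q)
    print(max(abs(a - b) for a, b in zip(weights, restored)))  # at most about scale / 2
    print(f"float32: {4 * len(weights)} bytes, int8: {len(q)} bytes")  # float32: 20 bytes, int8: 5 bytes
```

Each value is off by at most about half a quantization step, which is why accuracy usually holds up; models with unusual weight distributions can still lose some accuracy, so it is worth re-evaluating after conversion.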